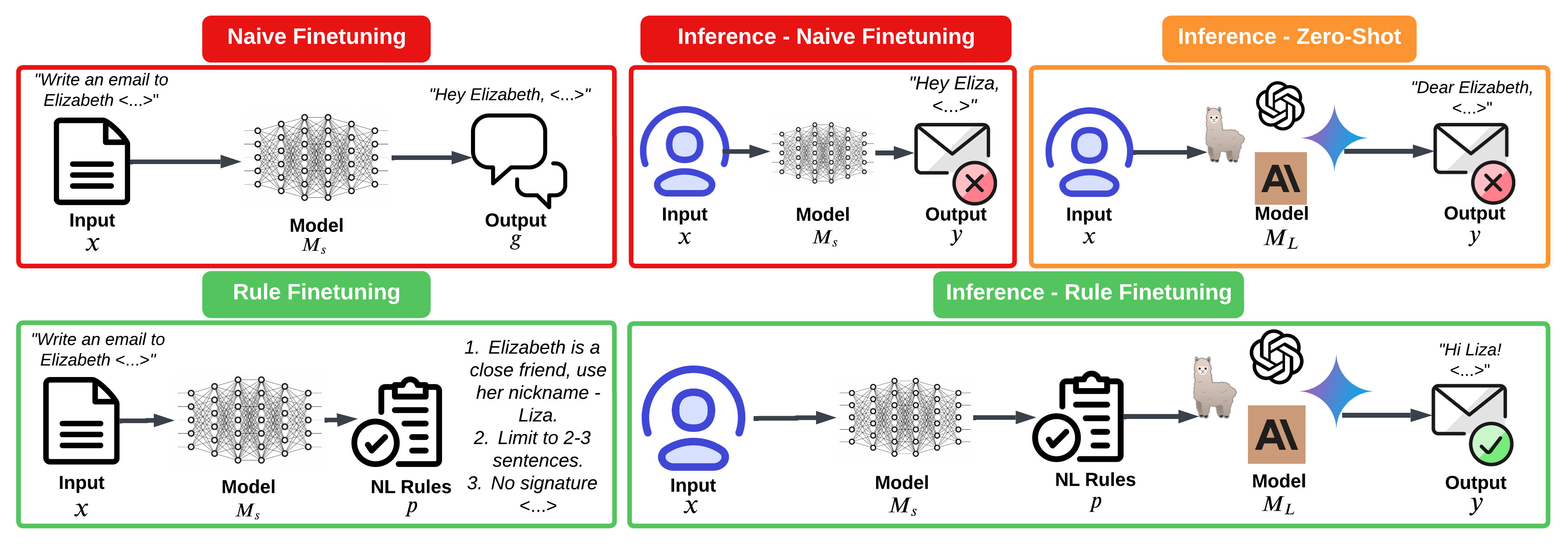

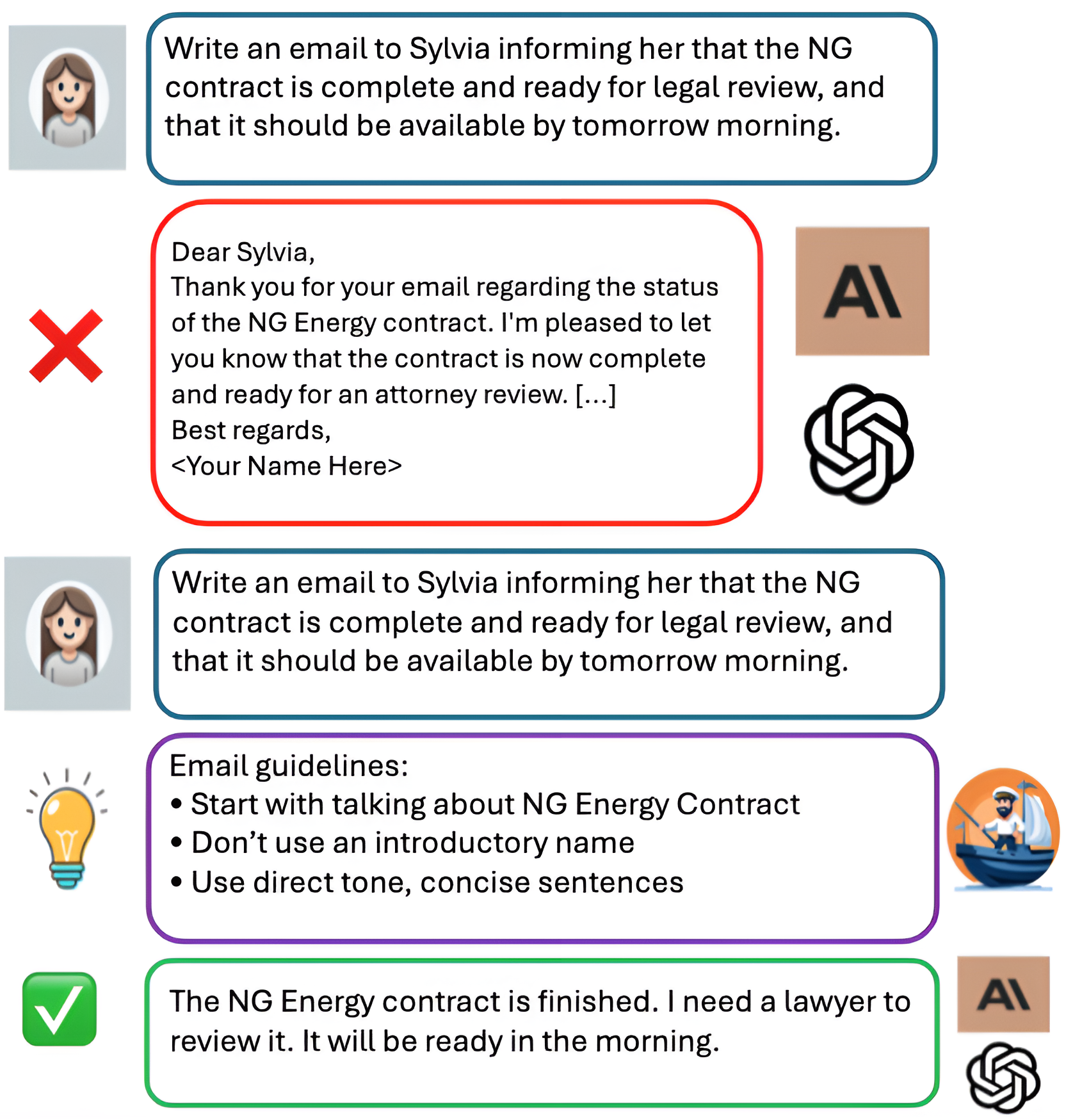

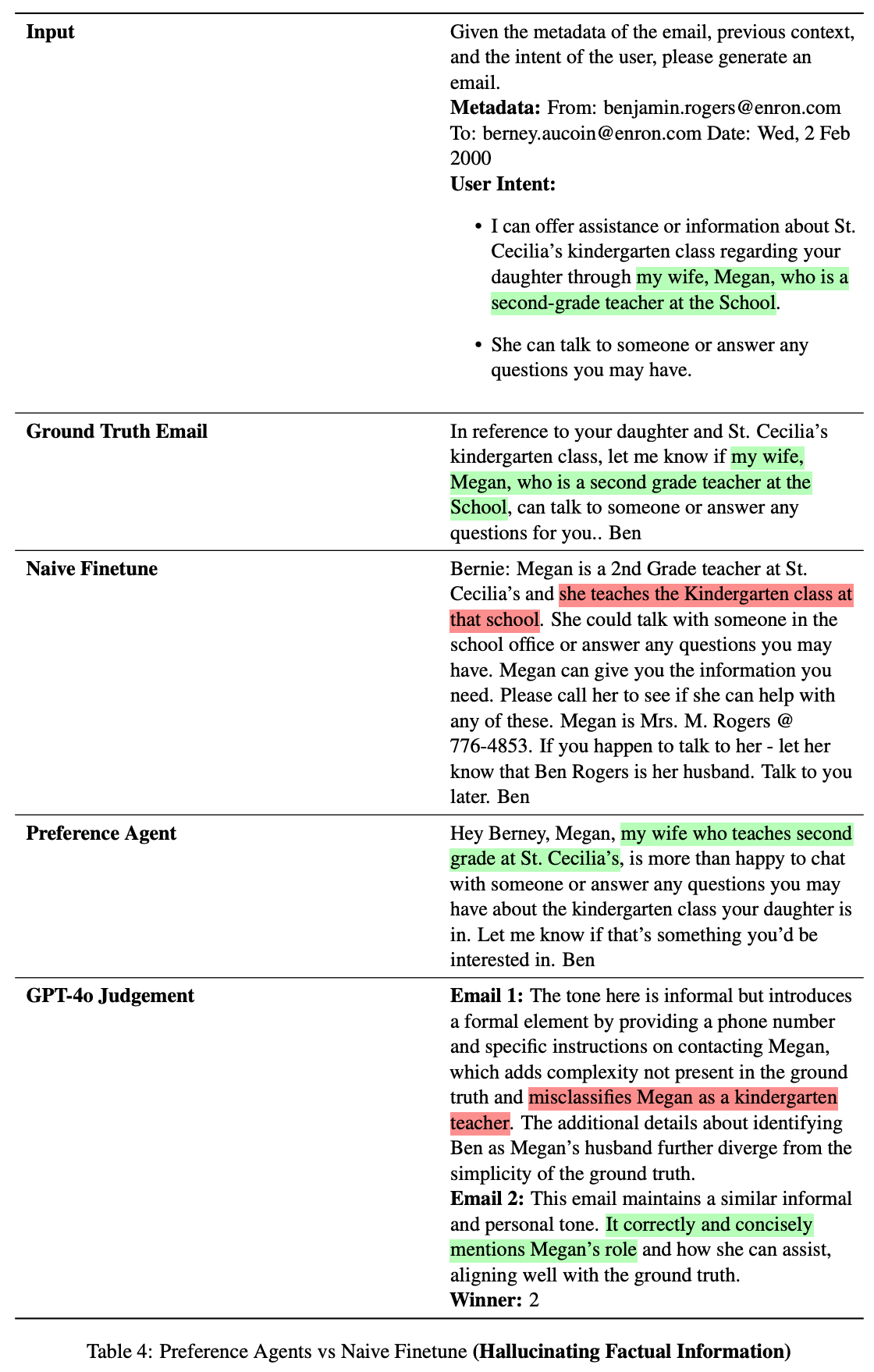

Large language models demonstrate impressive reasoning abilities but struggle to provide personalized content because they lack information about individual user preferences. Existing methods, such as in-context learning and parameter-efficient fine-tuning, fall short in capturing the complexity of human preferences, especially given the small, personal datasets individuals possess. In this paper, we propose a novel approach that uses small-parameter models as preference agents to generate natural language rules that guide a larger, pre-trained model, enabling efficient personalization. Our method involves a small, local "steering wheel" model that directs the outputs of a much larger foundation model, producing content tailored to an individual’s preferences while leveraging the extensive knowledge and capabilities of the large model. Importantly, this personalization is achieved without fine-tuning the large model. Experimental results demonstrate that our technique significantly outperforms baseline personalization methods. By allowing foundation models to adapt to individual preferences in a data- and compute-efficient manner, our approach paves the way for highly personalized language model applications.
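The two-model flow described above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions, not the paper's implementation: both models are stood in for by plain text-in, text-out callables, and the names `TextModel`, `generate_rules`, `personalized_generate`, and the prompt wording are all hypothetical.

```python
from typing import Callable

# Assumption: each model is wrapped as a plain text-in, text-out callable.
# Any local or hosted model client could be adapted to this shape.
TextModel = Callable[[str], str]


def generate_rules(preference_agent: TextModel, task: str) -> str:
    """Ask the small preference agent for natural language rules for a task.

    The prompt wording here is hypothetical and only illustrates the idea.
    """
    prompt = (
        "Write rules describing how this user wants the task done.\n"
        f"Task: {task}\n"
        "Rules:"
    )
    return preference_agent(prompt)


def personalized_generate(
    preference_agent: TextModel, large_model: TextModel, task: str
) -> str:
    """Steer the large model with rules produced by the small agent.

    The large model is only prompted; its weights are never updated.
    """
    rules = generate_rules(preference_agent, task)
    prompt = (
        "Follow these user preference rules:\n"
        f"{rules}\n\n"
        f"Task: {task}"
    )
    return large_model(prompt)
```

In this sketch the only personalized component is the small agent, so the large model can be swapped for a different one without touching the rest of the flow.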

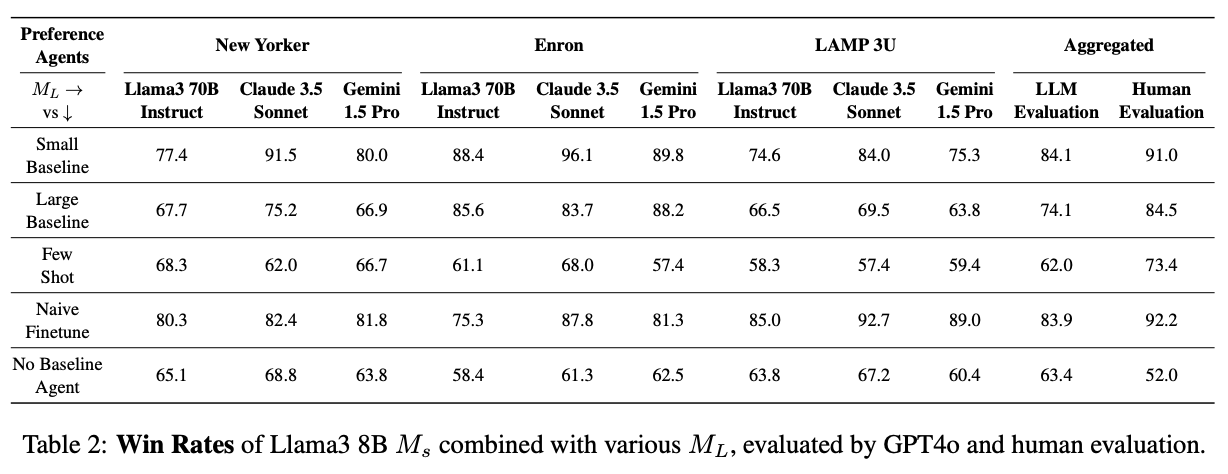

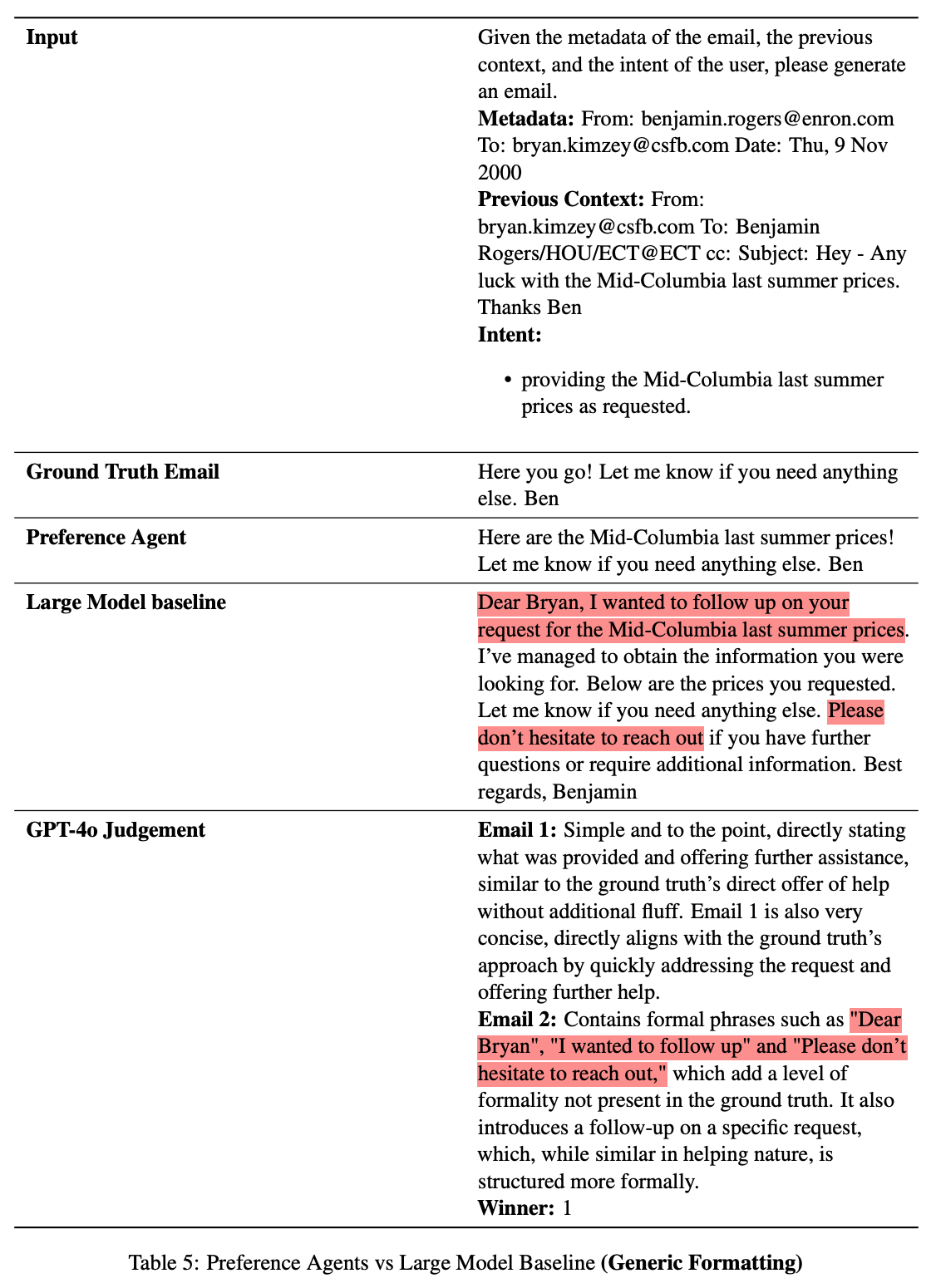

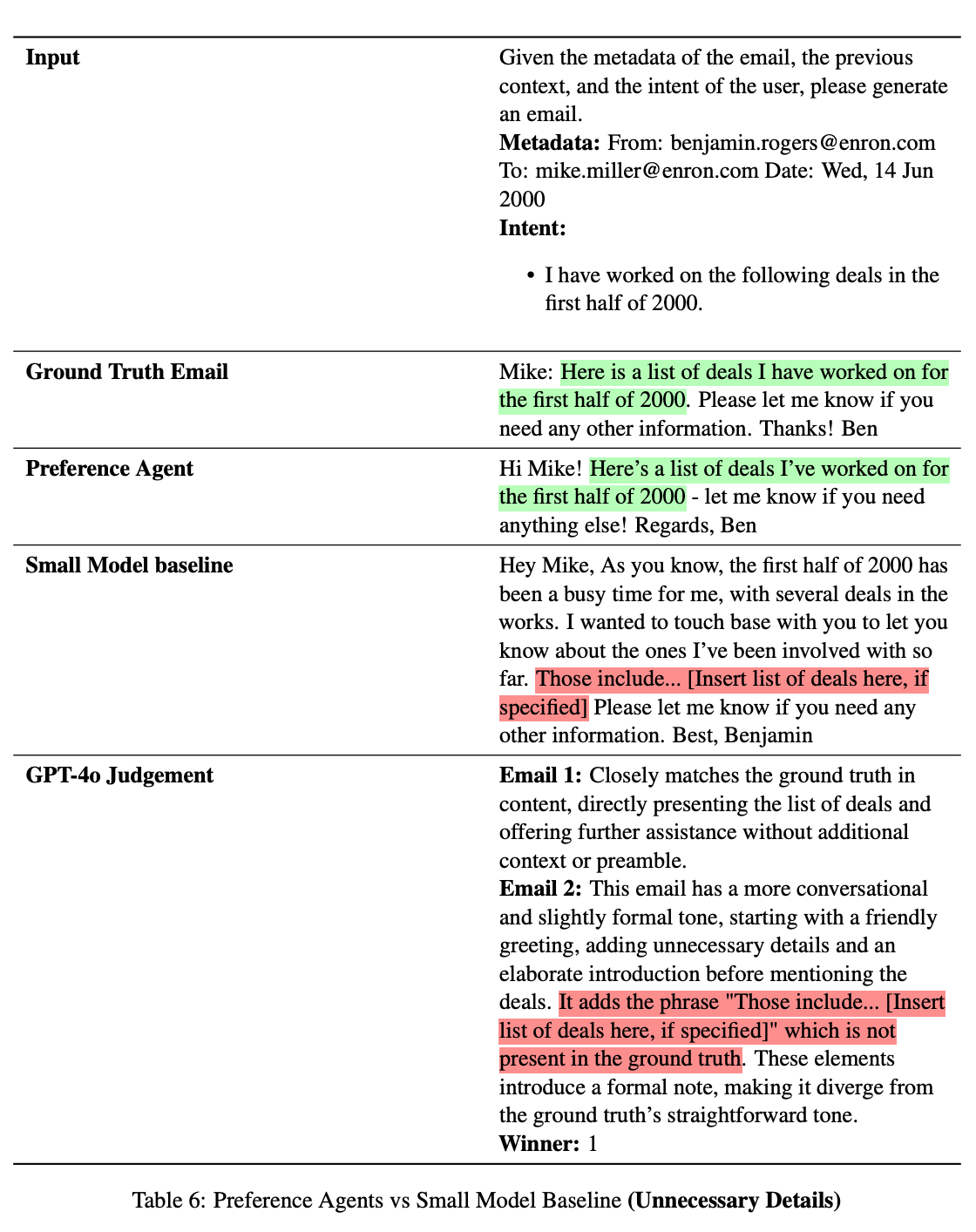

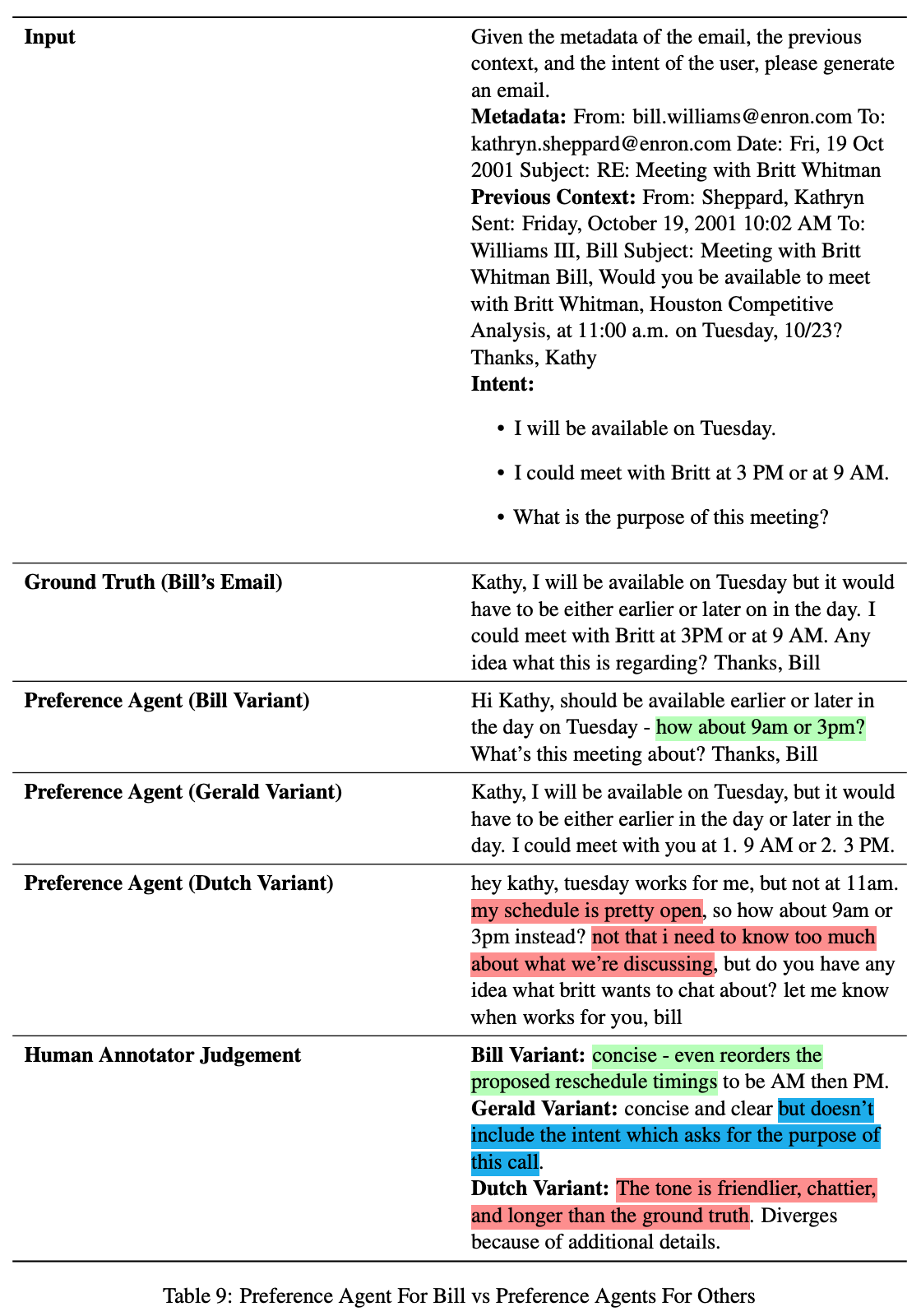

We evaluated our preference agents against several baselines across three datasets (Short Form: Enron-42K, Medium Form: LAMP 3U, Long Form: New Yorker), using three different large language models (Llama3 70B, Claude 3.5, Gemini 1.5 Pro) as the large model. In addition to human evaluation, we use GPT-4o as an LLM evaluator. Our method demonstrates strong performance.

@misc{shashidhar2024unsupervisedhumanpreferencelearning,
  title={Unsupervised Human Preference Learning},
  author={Sumuk Shashidhar and Abhinav Chinta and Vaibhav Sahai and Dilek Hakkani-Tür},
  year={2024},
  eprint={2410.03731},
  archivePrefix={arXiv},
  primaryClass={cs.CL},
  url={https://arxiv.org/abs/2410.03731},
}